By: Praveen Kumar H

June 19, 2023

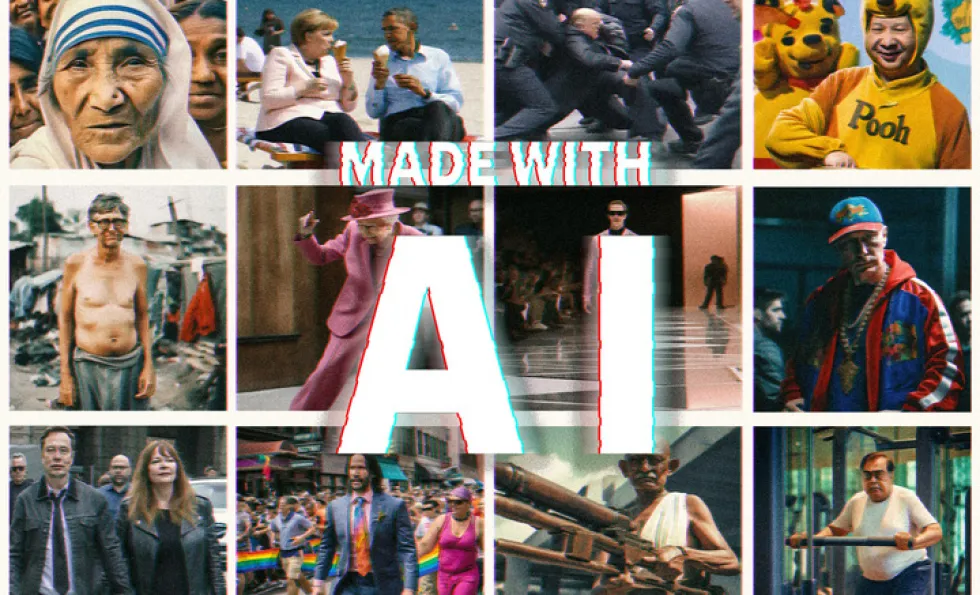

You have probably seen the viral images of Twitter owner Elon Musk holding a humanoid robot, shared with the claim that he is working on creating a “robot wife.” Or the images of Indian Prime Minister Narendra Modi wearing headgear and an extravagant costume at an event.

Do you know what these two pictures have in common? Both were created using artificial intelligence (AI). AI-generated images are being used as a tool to spread disinformation, and fake images of politicians, celebrities, and even the Pope have been shared as real. While some of them are merely funny, others can be harmful and used to spread propaganda.

Before exploring ways to detect such images, let’s first understand what generative adversarial networks (GANs) are and how they are linked to AI-generated images.

In 2014, computer scientists at the University of Montreal proposed generative adversarial networks as a machine learning method. They were interested in training a computer to examine and create images. An article in Business Insider details the group’s method: the system is first fed similar-looking images, and the software then extracts their features to create its own images.

A report in Wired notes that the first GAN-generated images were hardly saleable, but they sparked interest in AI-generated images. It explains that a GAN pits two neural networks against each other: one (the generator) tries to create something, while the other (the discriminator) tries to distinguish real examples from fake ones.
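For readers who want the formal version: the 2014 GAN paper by Ian Goodfellow and colleagues at the University of Montreal describes this contest as a two-player minimax game, in which the discriminator D tries to maximize, and the generator G tries to minimize, the same value function:

```latex
\min_G \max_D V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}(x)}\left[\log D(x)\right]
  + \mathbb{E}_{z \sim p_z(z)}\left[\log\left(1 - D(G(z))\right)\right]
```

Here D(x) is the discriminator's estimated probability that an image x is real, and G(z) is the image the generator produces from random noise z. The first term rewards D for recognizing real images and the second rewards it for rejecting generated ones, while G is trained to push D(G(z)) toward 1 so that its fakes pass as real.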

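AI image generators have used GANs to create a wide variety of images, including human faces, landscapes, and objects, although many of today’s popular tools, such as DALL-E 2 and DreamStudio (which runs Stable Diffusion), rely on a newer technique called diffusion models. Either way, the images these systems produce are often indistinguishable from real ones. That makes them powerful tools for creating realistic content, which is now being used to create fake imagery and even to scam people.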

So how can you tell whether an image is AI-generated? There are several ways, and some of them require nothing more than careful observation.

1) Look carefully

Zoom into the picture and look for inconsistencies. AI-generated images may show repeated patterns and symmetrical features that are too perfect or unnatural. For example, check carefully whether clouds, waves, feathers, or petals appear multiple times with the same shape and size. On the other hand, doors and windows on a building might be uneven when they would normally be symmetrical and identical in shape.

2) Closely look at body parts and other features

Closely examining the features and body parts in an AI-generated image may reveal errors and inconsistencies. For example, this image of Indian Prime Minister Narendra Modi shows two men in the background with partially formed fingers. Modi’s skin also looks artificial, and the lighting on his face and hands doesn’t match.

AI image generators also struggle with eyes, teeth, and text. It is oddly tricky for them to render actual letters and symbols, so posters, signboards, or logos in an image are often garbled or not rendered as intended.

3) Source of the image

A simple reverse image search using Google Lens, TinEye, Yandex, or Bing might help you find the source of an image. It’s also a good idea to trace the comments on social media and to look for watermarks that might reveal who originally posted it.
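Search engines such as Google Lens and TinEye don’t publicly document exactly how they match images, but a common textbook technique for finding near-duplicates is perceptual hashing. Below is a minimal sketch of “average hash” in plain Python, assuming the image has already been shrunk to an 8×8 grid of grayscale values (0 to 255). The function names and the distance threshold are hypothetical choices for illustration, not any search engine’s actual method.

```python
def average_hash(pixels):
    """Return a 64-bit fingerprint for an 8x8 grid of grayscale values.

    Each bit is 1 if that pixel is brighter than the grid's mean, else 0.
    """
    flat = [value for row in pixels for value in row]
    if len(flat) != 64:
        raise ValueError("expected an 8x8 grid of grayscale values")
    mean = sum(flat) / len(flat)
    bits = 0
    for value in flat:
        bits = (bits << 1) | (1 if value > mean else 0)
    return bits


def hamming_distance(hash_a, hash_b):
    """Count the bits that differ between two fingerprints."""
    return bin(hash_a ^ hash_b).count("1")


def looks_like_same_image(pixels_a, pixels_b, threshold=5):
    """Treat two images as near-duplicates if few bits differ (threshold is an assumption)."""
    return hamming_distance(average_hash(pixels_a), average_hash(pixels_b)) <= threshold


# Usage: a synthetic gradient and a uniformly brightened copy of it.
original = [[x * 8 + y * 4 for x in range(8)] for y in range(8)]
brighter = [[min(v + 10, 255) for v in row] for row in original]
print(looks_like_same_image(original, brighter))  # prints True
```

Because the hash only records whether each pixel is brighter or darker than average, simple edits such as brightening or recompressing tend to leave it unchanged, which is why a reposted copy can still be traced back to an earlier upload.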

However, not all AI generators place a watermark on their images: some offer it as an option, while others don’t add one at all. Still, keeping an eye out for this detail can help. Note also that a watermark is easy to crop out before an image is shared. Here’s an example of a quick fact-check of a fake viral image that carried a ‘FaceApp’ watermark.

4) Examine the title and description

Not everyone discloses the use of AI when posting images, but some do, and that information could be in a post’s title, description, or comments section. Besides those three places, you can check the author’s profile page for clues. You could also look for keywords such as Midjourney, DALL-E, and DreamStudio, the names of some apps used to create such images.
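If you are checking many posts, a simple keyword scan over the title, description, comments, and profile text can flag the ones that mention a generator. This is a minimal sketch; the function name and the spelling variants in the patterns are hypothetical choices. A match is only a clue, and the absence of a match proves nothing.

```python
import re

# Generator names mentioned above, with loose patterns for common spelling
# variants such as "Mid Journey" or "DALL E" (the variants are an assumption).
GENERATOR_PATTERNS = {
    "Midjourney": r"\bmid\s*journey",
    "DALL-E": r"\bdall[\s\-]*e",
    "DreamStudio": r"\bdream\s*studio",
}


def find_generator_mentions(*texts):
    """Return the generator names mentioned in any of the texts (case-insensitive)."""
    combined = "\n".join(texts)
    found = []
    for name, pattern in GENERATOR_PATTERNS.items():
        if re.search(pattern, combined, flags=re.IGNORECASE):
            found.append(name)
    return found


# Usage with made-up post text.
title = "Street scene at night"
description = "Made this with Midjourney v5 for fun"
comments = ["Is this real?", "Looks like DALL-E to me"]
print(find_generator_mentions(title, description, *comments))
# prints ['Midjourney', 'DALL-E']
```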

5) Pay attention to the background

The background of an AI-generated image may appear blurred or deformed. Background elements may repeat, edges may not be well defined, and objects may merge into one another.

In this Twitter thread, Bellingcat digital investigator Nick Waters debunked a viral image that claimed to show an explosion near the White House. The cars in the foreground of that image had no proper shape, an example of AI image generators rendering objects incompletely.

In May 2023, Google announced a new tool called ‘About this image’ that adds more context to its search results. The tool will first roll out in English in the U.S.

“We will ensure that every one of our AI-generated images has a markup in the original file to give you context if you come across it outside of our platforms. Creators and publishers will be able to add similar markups, so you’ll be able to see a label in images in Google Search, marking them as AI-generated,” Google said in a blog post.
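The quote doesn’t say what form this markup takes. One widely used option is the IPTC photo metadata standard, whose “digital source type” vocabulary includes the values `trainedAlgorithmicMedia` and `compositeWithTrainedAlgorithmicMedia` for AI-generated content; the sketch below assumes the markup uses those values in plain-text XMP metadata. It is a naive byte scan with hypothetical function names, not a full metadata parser, and since metadata is as easy to strip as a watermark is to crop, finding nothing proves nothing.

```python
from pathlib import Path

# IPTC digital source type values for AI-generated media (assumed markup format).
# The longer "composite" value is checked first because it contains the shorter one.
AI_SOURCE_TYPES = (
    b"compositewithtrainedalgorithmicmedia",
    b"trainedalgorithmicmedia",
)


def ai_source_type_in_bytes(data):
    """Return the first AI source-type value found in raw file bytes, or None."""
    lowered = data.lower()
    for marker in AI_SOURCE_TYPES:
        if marker in lowered:
            return marker.decode("ascii")
    return None


def check_image_file(path):
    """Read an image file from disk and report any embedded AI source-type marker."""
    return ai_source_type_in_bytes(Path(path).read_bytes())


# Usage with a simplified XMP fragment like one an image file might embed.
sample_xmp = (
    b'<rdf:Description Iptc4xmpExt:DigitalSourceType='
    b'"http://cv.iptc.org/newscodes/digitalsourcetype/trainedAlgorithmicMedia"/>'
)
print(ai_source_type_in_bytes(sample_xmp))  # prints trainedalgorithmicmedia
```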

AI-generated images designed to go viral are most likely to turn up on social media platforms. Character artist Del Walker makes the case that context matters first, before you start examining minute details such as fingers, background objects, and other elements to confirm whether an image was generated by AI.

“Midjourney's AI can now do hands correctly. Be extra critical of any political imagery (especially photography) you see online that is trying to incite a reaction,” Walker wrote.

You could also try these free tools, which quickly estimate how likely it is that the image you’re looking at is AI-generated. Although there are several GAN detectors, only some are free to use. But AI technology is evolving rapidly, and AI-generated images can be expected to become more sophisticated and harder to detect in the future. Always keep a healthy level of skepticism about online content, and stay curious!