By: Ankita Kulkarni

October 4, 2024

Logically Facts analyzes the measures announced by social media platforms ahead of the U.S. elections in 2024. (Source: X/ @Vice President Kamala Harris/Logically Facts)

The 2020 presidential election in the United States led to a violent attack on the U.S. Capitol, the seat of Congress, an attack for which many say social media was partially responsible. This year, social media platforms face a big test: whether they can limit the spread of political misinformation ahead of the November polls, as incumbent Vice President and Democratic candidate Kamala Harris faces Republican candidate and former president Donald Trump.

In the run-up to the race, social media platforms announced measures along with new policies on AI content detection, transparency in political ads, and protecting users' discretion to discuss and post on politics. Logically Facts takes a closer look at what Meta, X (formerly Twitter), TikTok, and YouTube have announced.

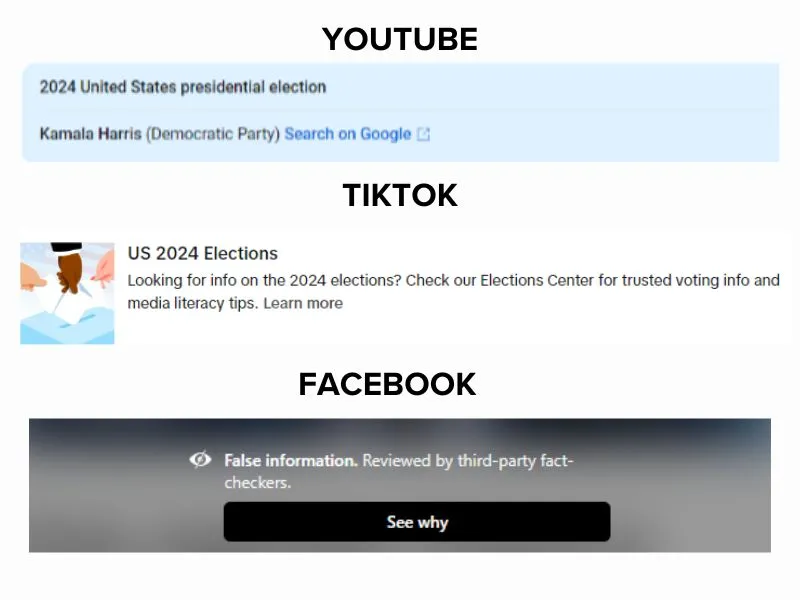

Ahead of the U.S. elections, Meta, TikTok, and YouTube have committed to prominently displaying links to official information on the voting and registration process from government websites such as vote.gov, usa.gov, and the U.S. Election Assistance Commission when a user searches for terms related to the 2024 elections. X announced in August 2023 that it would label posts that suppress voter participation or mislead people about when and where to vote during an election.

TikTok and Meta continue to partner with multiple third-party fact-checking organizations to label posts containing election mis- and disinformation. YouTube also includes information panels to provide additional context to videos.

Screenshots showing an information panel on YouTube, TikTok directing users to trustworthy voting information, and a labeled post on Facebook. (Source: YouTube/TikTok/Facebook)

X, meanwhile, depends heavily on community notes to add labels to misleading posts or misinformation, where registered contributors can express differing views to provide additional context to posts. However, an analysis by Logically Facts found that disagreements among raters delay the addition of context to crucial posts, particularly during elections, as seen during polls in India and the U.K. earlier this year.

Social media platforms sometimes take down posts to combat mis- and disinformation, a measure that a majority of participants supported in a 2021 survey by the University of South Florida. But experts say removal or labeling may not be having the intended impact, pointing to several problems in how platforms combat misinformation.

Platforms like Meta exempt politicians' posts from being fact-checked. Meanwhile, misinformation is burgeoning on X despite a community-based approach towards content moderation gaining some confidence.

But American investigative journalist Jesselyn Cook says fact-checking labels have largely been ineffective. “I think it almost elicits a response that big tech is trying to control the truth. Community notes on X have more positive responses since they are generated by the community, but there are exceptions.”

A study by the Carnegie Endowment, a global think tank, on “Countering Disinformation Effectively: An Evidence-Based Policy Guide” noted that labeling false content or providing additional context can reduce the likelihood of users believing it and sharing it.

Another study by Harvard University published in 2022 observed that fact-checks improved voters' factual understanding and confidence in the integrity of the 2020 U.S. election, while having no influence on voting behavior. Additionally, a recent survey by Axios Vibes revealed that most Americans are more worried about politicians spreading misinformation than about foreign interference or AI content.

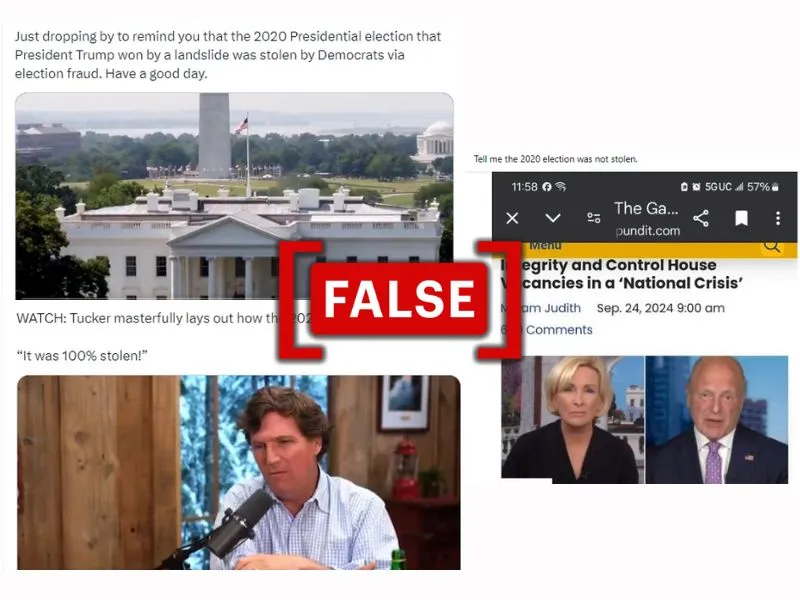

As of September 30, Logically Facts was still able to identify several posts claiming the 2020 U.S. elections were rigged, despite the claim being widely debunked.

Screenshots of social media posts claiming the 2020 U.S. elections were rigged.

Analyses by the New York Times, AP News, and the British-American nonprofit Center for Countering Digital Hate (CCDH) noted that multiple posts on X by owner Elon Musk containing election misinformation circulated without any community notes.

Although claims made by Musk were debunked by fact-checkers, they still managed to surface. For instance, Musk amplified the claim that “Democrats are importing illegal voters” to win the elections. However, fact-checkers have debunked the claim multiple times, clarifying that it takes a long time for immigrants to become U.S. citizens and be able to vote in elections, and that migrants admitted to the country on parole only have a work permit for a limited period.

With AI-generated content surging on social media as campaigns for the elections intensify, YouTube, TikTok, X and Meta have all rolled out updates to add visible markers to label AI content, and have mandated a disclaimer be added to any political ad if it was created or altered using AI tools.

However, Meta recently updated its policy on labeling AI information, noting that the labels will no longer be visible on the image but will be moved to the list of options under the menu section of a post. This update can make it harder for users to identify AI-generated content than before, when the label was visible on the image itself.

AI-generated content is especially in focus since it was revealed that Grok, an AI chatbot developed by Musk-founded xAI, could generate images of ballot box stuffing and spread misinformation about election candidature. CCDH has raised concerns about Grok's weak guardrails and its potential to create misleading election content.

In January 2024, a fake robocall mimicking President Joe Biden went viral, urging New Hampshire voters to skip the U.S. presidential primary, which highlighted the coming era of AI-generated election content. A report by Protect Democracy, a U.S. non-profit organization, notes that advancements in AI technology could make existing threats worse.

For example, it can exacerbate voter suppression, and generative AI could burden election administrators with overwhelming requests for evidence on claims of election fraud, requests that can be sent using AI at an “unprecedented scale.”

“Many companies have partnerships with fact-checkers and efforts to downrank false content. The biggest challenges today are detecting and labeling AI-generated content,” Katie Harbath, founder and CEO of technology policy firm Anchor Change and former Public Policy Director of Global Elections at Meta, told Logically Facts.

However, some experts also say AI’s impact on elections is being exaggerated, with research suggesting it didn’t affect results in the “year of elections.”

Meta, X, and YouTube have stricter policies on political ads and prohibit videos that can manipulate voters. While Meta has asked advertisers to disclose the funding source by adding a "paid for by" disclaimer, advertisers on YouTube and X have to undergo a verification process before running ads on the platform. TikTok’s policies, meanwhile, disallow paid political marketing, and accounts of political parties and politicians cannot advertise on the platform.

Politico reported that in the 2020 U.S. elections, Facebook pages that displayed political ads had escaped the platform's scrutiny and “bought ads aimed at swaying potential voters.”

A 2022 study by Global Witness found that TikTok approved 90 percent of ads containing false and misleading information on elections. Facebook was partially effective, while YouTube succeeded in detecting and suspending problematic ads.

Earlier this year, Logically Facts analyzed Meta’s ad library data to find that during the general elections in India, some Facebook pages shared misleading posts and spent millions to increase their reach and engagement, and not all were taken down. Internet Freedom Foundation’s Executive Director Prateek Waghre had then told Logically Facts, “The degree of responsibility on platforms is even higher in the context of ads.” He added that platforms should respond effectively when motivated actors exploit loopholes like satire to evade content moderation.

Following the January 2021 Capitol insurrection, Meta announced that it would deprioritize political content for users. However, the latest update, issued in September 2024, states that users can now change this default setting and choose to see political content from accounts they follow as well as those they don't.

While it has other regulations in place, YouTube updated its policy in June 2023, stating that it would stop removing content with false claims about 2020 and other past U.S. presidential elections to support “open political debate.” It is to be noted that this comes despite a 2022 study by New York University which found that YouTube recommended videos of election fraud to people who were already skeptical about the legitimacy of the 2020 U.S. election.

A.J. Bauer, Assistant Professor at the University of Alabama, feels divided about this. “On one hand, the Trump campaign's continued misinformation about the 2020 election can make people less trustworthy of the current elections,” he said. “On the other hand, those videos can help historians and journalists to contextualize reporting on current elections, which is probably good in the long run.”

Cook views the move as YouTube trying to navigate criticism from both left and right ideologies. “I think YouTube was taking a more neutral approach, which is not advised by people who study these issues deeply.”

Commenting on the emerging threat of AI, Director of Cornell University’s Security, Trust, and Safety Initiative Alexios Mantzarlis told Logically Facts that he worries about “deceptive evidence creation in the support of misinformation” and deepfake audio.

“The most important is a clear and public definition of election misinformation—specifically about the voting process and results—and their procedures to flag it, slow it down, and, where appropriate, take it down,” he said.

Cook, on the other hand, emphasized bolstering and supporting “credible journalism” through algorithmic promotion. “Instead of banning content, platforms should focus on transparent, expert-informed policies to determine what content is promoted or monetized.”

(Edited by Sanyukta Dharmadhikari and Ilma Hasan)

(Editor’s Note: Logically Facts is a part of Facebook’s third-party fact-checking program in the U.K. and TikTok's fact-checking programme in Europe)