By: Rahul Adhikari

February 14, 2024

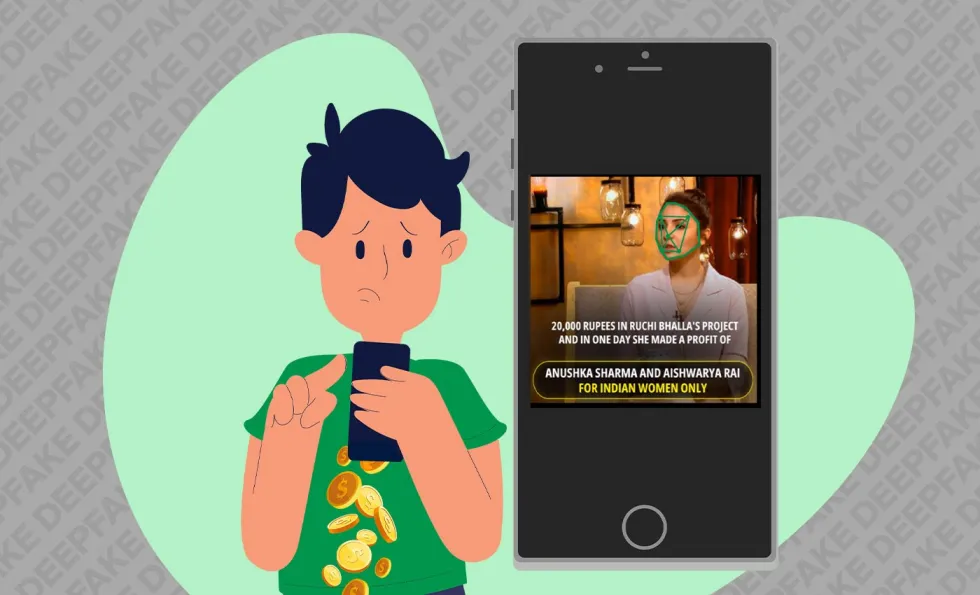

Deepfakes are now being utilized for financial scams and frauds, leaving a trail of victims who have lost large amounts of money to scammers. (Source: Freepik.com)

What do Bollywood actors Aishwarya Rai Bachchan, Anushka Sharma, and Shilpa Shetty, Indian industrialist Ratan Tata, and Isha Foundation founder Jaggi Vasudev, also known as Sadhguru, have in common? They are all targets of deepfakes used to promote fraudulent money-making schemes on Telegram.

According to the "2023 State of Deepfakes Report" by Home Security Heroes, a U.S.-based web security services company, deepfake videos have increased fivefold since 2019. In India, the latter half of 2023 witnessed a surge in deepfakes targeting Bollywood actors Alia Bhatt, Kajol, Rashmika Mandanna, and Katrina Kaif, in which videos of them were convincingly altered to add sexual undertones.

The creation, use, and dissemination of such AI-generated deepfakes have risen in India, with financial fraud being a major consequence of the increasing accessibility of AI technology.

Earlier this year, Indian cricketer Sachin Tendulkar flagged a viral deepfake video of himself that appeared to show him praising an online gaming app as a quick way to make money. Logically Facts has fact-checked several such deepfake videos of Tesla CEO and X owner Elon Musk and Infosys co-founder N.R. Narayana Murthy endorsing a dubious 'investment platform' called Quantum AI, circulating on Facebook.

Similarly, a network of deepfakes on Facebook is being used to lure unsuspecting individuals into fraudulent Telegram channels. These videos feature glowing testimonies from prominent personalities, use audio similar to their real voices, and nearly synchronize the personalities' lip movements with the audio.

Similar deepfake videos were published from several accounts promoting different Telegram channels. (Source: Facebook/Modified by Logically Facts)

These videos, deployed as sponsored content and advertisements on Facebook, feature a celebrity discussing someone running an investment project and are linked to a Telegram channel in that individual's name. Once unsuspecting users hoping to make quick money join, the channel administrators employ deceptive tactics to defraud them.

Adelize van Eeden, a Vision AI Engineer at Logically, expressed concern that even as detection techniques improve enough to identify deepfakes reliably, creators are developing more sophisticated models capable of generating even more convincing ones.

However, van Eeden outlined methods that could help raise awareness about deepfake content. "It's crucial to inform the public about how convincing these deepfakes can be and how difficult they are to identify. We should provide examples of image, audio, and video deepfakes, along with case studies of how individuals have already been deceived and scammed by deepfakes," she explained.

She further emphasized the importance of encouraging people to double-check and verify content they suspect might be fake and to share publicly available tools that can help detect AI-generated media.

One of the most viral videos we uncovered as part of this trend features a deepfake of Sadhguru and an India Today anchor, promoting the Telegram channel and investment fund of 'Suraj Sharma'. A video promoting Sharma has garnered over 250,000 views on Instagram and more than 600 comments.

The videos are run as sponsored advertisements from a Facebook page named "Suraj Sharma - Indian Mentor." Our review of the ad library of the page revealed several advertisements using similar deepfakes of Sadhguru promoting Sharma.

Screenshot of the deepfake videos in the ad library of the "Suraj Sharma - Indian Mentor" Facebook page. (Source: Facebook)

Meanwhile, a deepfake video of Ratan Tata promotes the Telegram channel of one ‘Laila Rao’, who is presented in the video as his manager. It encourages people to join the channel, invest a minimum amount, and get rewarded with a larger sum. In December 2023, Tata took to Instagram to denounce the video as fake.

Deepfake videos of Tata and Sadhguru promoting Laila Rao’s channel. (Source: Screenshots/Facebook)

Similar deepfake videos seen on Facebook of India Today and Sadhguru are also being used to promote Rao's Telegram channel and another channel named ‘Sona Agarwal.’ The Isha Foundation has issued a notice clarifying that Sadhguru's videos, images, and cloned voices were being shared to run financial scams in the names of Suraj Sharma, Laila Rao, and Amir.

Isha Foundation has clarified that the videos used by these pages are not real. (Source: Isha Foundation/Screenshot)

We also found deepfake videos featuring Anushka Sharma and Aishwarya Rai Bachchan, as well as one featuring Shilpa Shetty, used to promote another Telegram channel named Ruchi Bhalla. Logically Facts had previously fact-checked the video of Sharma and Rai Bachchan, tracking down the original videos, which had no link to any investment opportunities.

Screenshots of deepfake videos of Bollywood actors Anushka Sharma, Aishwarya Rai Bachchan, and Shilpa Shetty that were used to promote Ruchi Bhalla’s Telegram channel. (Source: Facebook/Screenshot)

Upon close examination, these videos often reveal signs of digital manipulation, such as inconsistencies in spelling, variations in the pronunciation of the same name, unexpected accents, a lack of voice modulation, and, most notably, unnatural movements of the mouth and lips. But despite these signs, the enticing nature of the announcements in the videos seems to override any suspicions about their legitimacy.

We found four Telegram channels linked to the deepfake videos, named after Suraj Sharma, Laila Rao, Sona Agarwal, and Ruchi Bhalla, each with over 10,000 subscribers. We also found multiple other Telegram channels using these names, several of them boasting significant subscriber counts.

Telegram channels linked to Suraj Sharma, Laila Rao, Sona Agarwal, and Ruchi Bhalla. (Source: Telegram/Modified by Logically Facts.)

Of the six identical channels linked to Sharma, several have over 20,000 subscribers, while one has 120,331 subscribers. All the channels use the same photo of a man, purported to be Sharma, as their display picture and carry photos of his supposed lavish lifestyle. However, the images and videos used in the channels are taken from the Instagram account of a lifestyle vlogger named Arslan Aslam, who has over 1.8 million followers on Instagram.

Screenshots of the messages shared in the Telegram channels. (Source: Telegram)

Image comparison between the images from the Telegram channels and Arslan Aslam’s images posted on Instagram.

Five of the six channels responded to inquiries from Logically Facts: two via WhatsApp and three via Telegram. While the contact details on Telegram listed both WhatsApp contacts under the name Suraj Sharma, one identified himself as Sagar and the other as Vijay when asked about their identity. These individuals provided bogus identification and promised up to 5x returns on investments. They provided different UPI IDs for payment and claimed the funds would be used for Bitcoin investment.

When Logically Facts inquired about the deepfake videos and the misattributed visuals, one person blocked us, while others either denied posting such videos or stopped responding.

Further investigation into the other channels revealed that Indian television actor Smriti Khanna was falsely portrayed as Laila Rao, that the images and videos of 'Sona Agarwal' on the channels were actually of fashion and lifestyle blogger Sukhneett Wadhwa, and that Dubai-based lifestyle vlogger Hanaa Albalushi was portrayed as Ruchi Bhalla. Khanna has since issued a clarification on Instagram warning about such scams. All these channels engaged with users in a similar manner.

Screenshot of the clarification posted by Smriti Khanna. (Source: Instagram/Screenshot)

While the above points raise suspicion, they are not enough on their own to label these channels as fraudulent. However, Logically Facts found several complaints lodged against these channels on the website of an NGO called Consumer Complaints Court. Based on the complaints on this portal alone, people have lost at least Rs 24,000 to the Suraj Sharma Telegram channels, at least Rs 542,022 to the Sona Agarwal channels, at least Rs 494,600 to ‘Laila Rao’ and at least Rs 65,817 to ‘Ruchi Bhalla.’

Screenshots of conversations with these channels uploaded to the Consumer Complaints Court portal suggest a pattern of fraud: after receiving an initial payment, the channel owners claim the investment has generated a large profit. However, to receive the money, customers are told they must pay more money and give the channel owners a commission. Even after customers complied, the channels allegedly requested more money, resulting in significant financial losses for individuals.

Screenshots of conversations with these channels uploaded to the Consumer Complaints Court portal. (Source: Consumer Complaints Court/Screenshot)

Logically Facts spoke to one such victim, Ifrat Ara Jahan, a law student in Kolkata, who alleged that she had been duped by a ‘Laila Rao’ channel. Providing screenshots of her transactions and conversation, she said, “I initially invested Rs 2,000. Later, I was asked to pay an additional Rs 3,000 to get the money back. I paid the money, and they then asked for Rs 5,000 more. I did that, too, and then they asked for more money. Every time they gave me a different UPI ID for payment. That’s when I started asking questions, and they eventually deleted the Telegram account where I was communicating with them. I lost Rs 10,000 in total.”

Screenshots of payments and deleted conversations. (Source: Screenshots/Provided to Logically Facts)

Shruti Shreya, Senior Programme Manager - Platform Regulation, Gender & Tech, at The Dialogue, emphasized that the responsibility to protect users from harm lies with social media platforms. “Rule 31D of the IT act outlines 10 categories of online harm, imposing a duty on platforms to regularly inform users about these risks. Platforms must remind users of these harms, conduct due diligence, and promptly remove content falling into any category. Misinformation and privacy breaches are among these categories, and deepfakes, created with the intent to deceive, can be classified under either.”

“Platforms must regularly inform users about these harms, and upon user reports of deepfake content, they must acknowledge within 24 hours and resolve within 72 hours. Serious issues require a 72-hour resolution timeline,” Shreya added.

Meta, recently encouraged to reconsider its manipulated media policy after permitting an altered video of U.S. President Biden to remain on Facebook, announced it would label AI-generated video or audio based on disclosures by the authors of such posts and apply penalties for non-compliance. For high-risk matters, Meta stated it might “add a more prominent label if appropriate, so people have more information and context.”

Telegram, WhatsApp, and Meta did not respond to requests for comment from Logically Facts at the time of publishing.

Beyond platform policies, India has laws to protect individuals and avenues for redressal. Karnnika A. Seth, a cyberlaw expert and advocate at the Supreme Court of India, said that Section 465 of the IPC prescribes punishment for forgery, including a fine, while Sections 66C and 66D of the IT Act provide punishment for identity theft and cheating by personation, and Section 420 of the IPC makes cheating a punishable offense. She added that “specific laws addressing AI and fake news are expected under the Digital India Act, set to replace the IT Act 2000.”

“Victims must report it to cybercrime.gov.in and 1930 helpline. They can file a complaint and seek compensation,” Seth added.

Shreya also noted that defrauded users can complain through a platform's in-app reporting tools and its grievance officer, and appeal to the Grievance Appellate Committee (GAC) if necessary.

Seth pointed out that while the EU is passing the AI Act and the U.S. has the Deep Fake Accountability Act, which mandates disclosures regarding AI-generated deepfakes to protect people from deception and harm, it's crucial for India to enact stringent laws ensuring transparency and disclosures in deepfake creation. “Strict penalties should be in place for the irresponsible use of technology to commit offenses and harm the nation or its people,” she said.

She emphasized the need for a new Digital India Act, as the current IT Act does not adequately address new challenges. She highlighted that existing channels are not being fully utilized and awareness is low. Apart from enacting laws, other aspects of addressing this issue should be explored, and the implementation of existing laws is also crucial.

(Edited by Shreyashi Roy & Nitish Rampal)

(Disclaimer: Founded in 2017, Logically combines artificial intelligence with expert analysts to tackle harmful and manipulative content at speed and scale. The company has offices in the UK, US, and India. For more information, please visit Logically's website. Logically Facts is an independent subsidiary of Logically.)